BridgTime Whitepaper

• Do not modify PG/card settlement rules or cadences. BridgTime is an adjacent verification/attestation layer that augments, not interrupts, existing payment and settlement flows.

• Record only minimal metadata on-chain (hash, grade, status, signature, timestamp). Originals and any PII-like data remain encrypted off-chain; the chain functions as an immutable audit index and receipt.

• Make every state transition reproducible (who / when / basis) via signatures, CIDs, and timestamps so equivalent inputs yield equivalent verification.

• Standardize to a machine-readable RVA schema so reconciliation, underwriting, and monitoring can be automated (no more spreadsheet or screenshot parsing).

• Security & compliance by default: KMS/HSM-backed key management, RBAC/ABAC, mTLS plus request signing, and end-to-end audit trails.

BridgTime deliberately separates off-chain data and risk computation from a minimal on-chain attestation layer.

Full PG settlement feeds, merchant identifiers, chargebacks, and model features stay in the off-chain data lake, behind KYC/AML and data protection controls.

On-chain, BridgTime writes only small, standardized RVA attestations:

• a pseudonymous RVA key or batch identifier (hash or UUID),

• current status (for example, CREATED, VERIFIED, SHARED, UPDATED, REVOKED, EXPIRED),

• coarse risk bands and validity windows, and

• references to off-chain reports or encrypted payloads.

Pushing full receivable detail on-chain would quickly collide with:

1) regulatory and privacy obligations (PCI, data protection, right-to-erasure),

2) settlement scale and gas cost (millions of rows per merchant per month), and

3) the need to evolve schemas and risk models without hard-forking the network.

Instead, the chain acts as a high-integrity index: it records which batches have been verified, under which rules, and with which signer set. LFPs and merchants can then combine on-chain status with off-chain risk reports and contracts to make credit and prepayment decisions.
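The on-chain/off-chain split described above can be sketched as follows. This is a minimal illustration, not the BridgTime schema: the field names (`rva_key`, `payload_hash`, `report_ref`, and so on) and the helper functions are assumptions made for the example. Only a hash of the encrypted payload and coarse metadata go into the record; the payload itself never does.

```python
import hashlib
import uuid

# Status names taken from the list above; everything else is hypothetical.
ALLOWED_STATUSES = {"CREATED", "VERIFIED", "SHARED", "UPDATED", "REVOKED", "EXPIRED"}


def build_attestation(encrypted_payload, report_ref, status, risk_band,
                      valid_from, valid_until):
    """Build the minimal record that would be anchored on-chain."""
    if status not in ALLOWED_STATUSES:
        raise ValueError(f"unknown status: {status}")
    if valid_until <= valid_from:
        raise ValueError("validity window must be non-empty")
    return {
        "rva_key": uuid.uuid4().hex,  # pseudonymous ID, carries no merchant data
        "payload_hash": hashlib.sha256(encrypted_payload).hexdigest(),
        "status": status,
        "risk_band": risk_band,       # coarse band, not the raw score
        "valid_from": valid_from,
        "valid_until": valid_until,
        "report_ref": report_ref,     # pointer to the off-chain report
    }


def payload_matches(attestation, encrypted_payload):
    """Check an off-chain payload against the anchored hash."""
    digest = hashlib.sha256(encrypted_payload).hexdigest()
    return attestation["payload_hash"] == digest
```

An LFP holding the off-chain payload could call `payload_matches` to confirm it is the one the attestation refers to, without the chain ever seeing the receivable detail.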

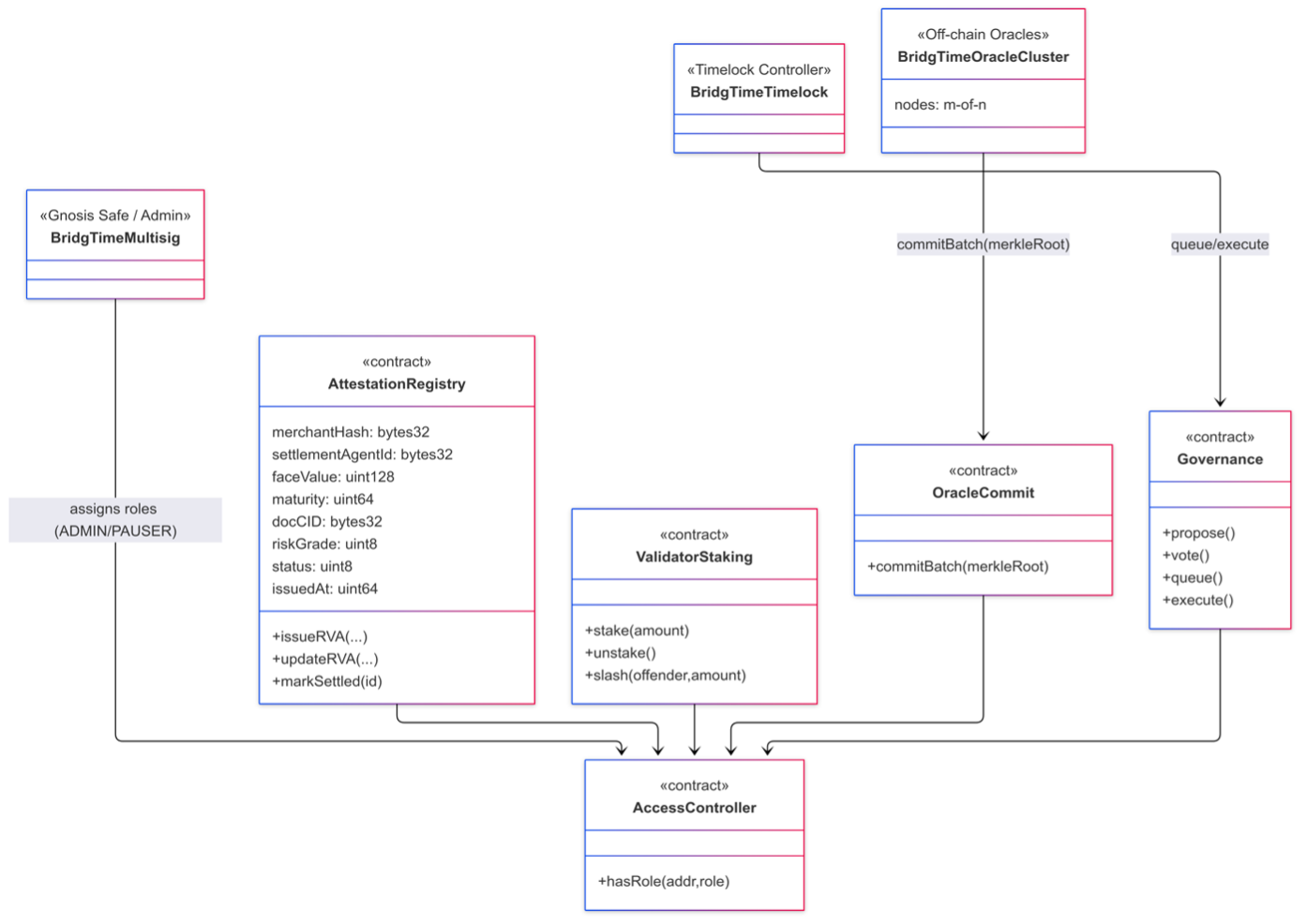

Core on-chain modules (intent):

– AttestationRegistry: issue/update/revoke RVA; state machine from CREATED to VERIFIED, optionally SHARED, and then UPDATED, REVOKED, or EXPIRED.

– ValidatorStaking: staking and slashing for oracle/validator sets; unbonding periods and slash policies configured by governance.

– OracleCommit: batch Merkle-root commits after m-of-n consensus across data sources.

– Governance: proposal → vote → timelock → execute for operating parameters and oracle sets.

– AccessController: role-based access (ADMIN / ORACLE / VALIDATOR / AUDITOR / PAUSER).

RVA events on-chain are status metadata only. They do not themselves trigger payments, assign receivables, or grant any direct rights in the underlying assets.

Sample: AttestationRegistry.sol

    // SPDX-License-Identifier: MIT
    pragma solidity ^0.8.20;

    // Role lookup interface (for example, implemented by AccessController).
    interface IAccess {
        function hasRole(bytes32 role, address who) external view returns (bool);
    }

    // Sample registry for RVA attestations. Lifecycle transitions are not
    // enforced in this sample: updateRVA accepts any status value.
    contract AttestationRegistry {
        enum Status { CREATED, VERIFIED, SHARED, UPDATED, REVOKED, EXPIRED }

        struct RVA {
            bytes32 merchantHash;       // pseudonymized merchant identifier
            bytes32 settlementAgentId;
            uint128 faceValue;
            uint64 maturity;
            bytes32 docCID;             // reference to the off-chain document
            uint8 riskBand;
            Status status;
            uint64 issuedAt;            // block timestamp at issuance; 0 means "no such RVA"
        }

        bytes32 public constant ROLE_GOV = keccak256("GOV");

        IAccess public access;
        uint256 public nextId;
        mapping(uint256 => RVA) public rvas;

        event Issued(uint256 id, bytes32 merchantHash, uint128 faceValue, uint8 riskBand, Status status);
        event Updated(uint256 id, uint8 riskBand, Status status);
        event Shared(uint256 id, bytes32 lfpKey, bytes32 scope);

        // Restricts a function to callers holding ROLE_GOV.
        modifier onlyGov() {
            require(access.hasRole(ROLE_GOV, msg.sender), "not-gov");
            _;
        }

        constructor(address access_) {
            access = IAccess(access_);
        }

        // Creates a new RVA in CREATED status and returns its sequential id.
        function issueRVA(
            bytes32 merchantHash,
            bytes32 settlementAgentId,
            uint128 faceValue,
            uint64 maturity,
            bytes32 docCID,
            uint8 riskBand
        ) external onlyGov returns (uint256 id) {
            id = nextId++;
            rvas[id] = RVA({
                merchantHash: merchantHash,
                settlementAgentId: settlementAgentId,
                faceValue: faceValue,
                maturity: maturity,
                docCID: docCID,
                riskBand: riskBand,
                status: Status.CREATED,
                issuedAt: uint64(block.timestamp)
            });
            emit Issued(id, merchantHash, faceValue, riskBand, Status.CREATED);
        }

        // Overwrites the risk band and status of an existing RVA.
        function updateRVA(
            uint256 id,
            uint8 riskBand,
            Status status
        ) external onlyGov {
            RVA storage r = rvas[id];
            require(r.issuedAt != 0, "not-found");
            r.riskBand = riskBand;
            r.status = status;
            emit Updated(id, riskBand, status);
        }

        // Marks an existing RVA as SHARED and logs the recipient key and scope.
        function shareRVA(
            uint256 id,
            bytes32 lfpKey,
            bytes32 scope
        ) external onlyGov {
            RVA storage r = rvas[id];
            require(r.issuedAt != 0, "not-found");
            r.status = Status.SHARED;
            emit Shared(id, lfpKey, scope);
        }
    }

RVA attestation lifecycle (state diagram)

stateDiagram-v2

[*] --> CREATED

CREATED --> VERIFIED: Oracle batch\nsign and commit

VERIFIED --> SHARED: LFP pulls RVA\nor merchant shares

SHARED --> UPDATED: New settlement\ndata or corrections

UPDATED --> VERIFIED: Re-verified by\noracle set

SHARED --> REVOKED: Fraud or\ncritical error

UPDATED --> REVOKED

VERIFIED --> EXPIRED: Past\nvalidity window

SHARED --> EXPIRED: LFP window\nclosed

REVOKED --> [*]

EXPIRED --> [*]
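The lifecycle above can be written as a transition table and checked off-chain before a status update is submitted. This is a sketch only: the edges are copied from the state diagram, while the names `TRANSITIONS`, `can_transition`, and `apply_transition` are made up for the example.

```python
from enum import Enum


class Status(Enum):
    CREATED = "CREATED"
    VERIFIED = "VERIFIED"
    SHARED = "SHARED"
    UPDATED = "UPDATED"
    REVOKED = "REVOKED"
    EXPIRED = "EXPIRED"


# Allowed edges, one entry per arrow in the state diagram.
TRANSITIONS = {
    Status.CREATED: {Status.VERIFIED},
    Status.VERIFIED: {Status.SHARED, Status.EXPIRED},
    Status.SHARED: {Status.UPDATED, Status.REVOKED, Status.EXPIRED},
    Status.UPDATED: {Status.VERIFIED, Status.REVOKED},
    Status.REVOKED: set(),  # terminal
    Status.EXPIRED: set(),  # terminal
}


def can_transition(current, target):
    """Return True if the diagram has an arrow from current to target."""
    return target in TRANSITIONS[current]


def apply_transition(current, target):
    """Return the new status, or raise if the move is not in the diagram."""
    if not can_transition(current, target):
        raise ValueError(f"illegal transition: {current.value} -> {target.value}")
    return target
```

A table like this makes the terminal states explicit: nothing leaves REVOKED or EXPIRED, so a revoked attestation cannot be quietly re-verified.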

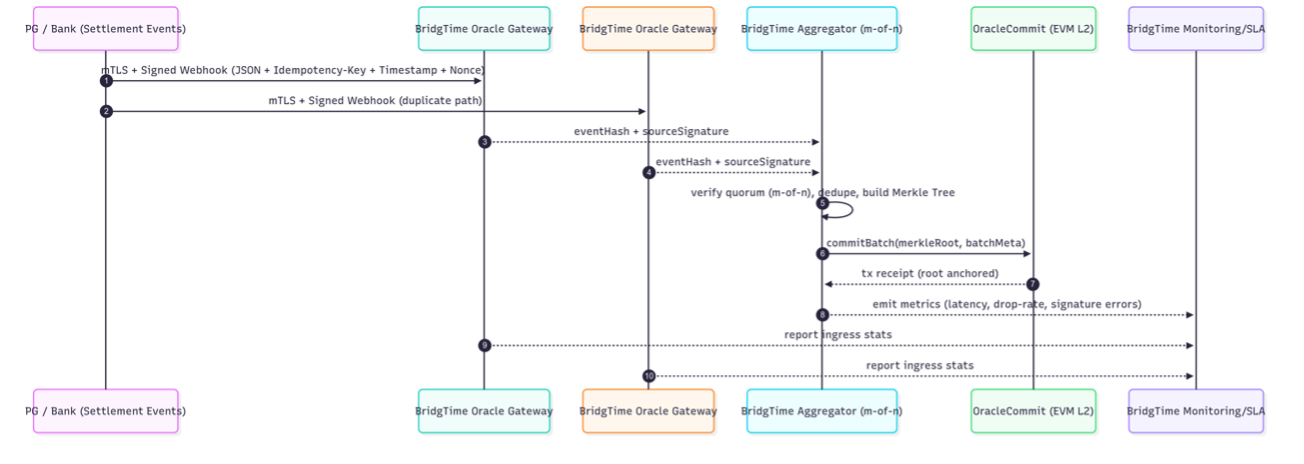

• Ingress: receive settlement events from PGs and banks over mTLS with HTTP-signature or HMAC. Require an Idempotency-Key, Timestamp, and Nonce; reject requests beyond an allowed clock-skew window.

• Deduplication & consistency: event-hash plus idempotency keys ensure exactly-once processing across active-active gateways.

• m-of-n & batch commits: aggregate distinct source signatures, verify quorum, then commit a Merkle root to OracleCommit every N seconds.

• SLA & incident handling: monitor p95 latency, drop rates, and signature errors; alerting, failover routing, retries, dead-letter queues, and priority queues for critical tenants.

• Keys & security: HSM/KMS-backed keys for oracle and gateway identities; RBAC/ABAC, IP allowlists, WAF and rate limits, mTLS between internal services; full audit into OpenTelemetry/SIEM.

• On-chain minimization: commit only Merkle roots, batch metadata, and signer-set IDs. Original settlement events remain encrypted off-chain and are referenced by CID or internal IDs.
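The m-of-n check and batch commit can be illustrated with a short sketch. Several simplifications are assumed here: HMAC-SHA256 with per-source keys stands in for real signatures, the Merkle tree uses plain SHA-256 and duplicates the last node on odd levels, and all function names are hypothetical. A production design would typically use asymmetric signatures and domain-separated leaf and node hashes.

```python
import hashlib
import hmac


def sign_event(key, event):
    """Stand-in signature: HMAC-SHA256 of the event under a source key."""
    return hmac.new(key, event, hashlib.sha256).digest()


def has_quorum(event, signatures, source_keys, m):
    """True if at least m distinct known sources signed this event."""
    valid = set()
    for source, sig in signatures.items():
        key = source_keys.get(source)
        if key is not None and hmac.compare_digest(sign_event(key, event), sig):
            valid.add(source)
    return len(valid) >= m


def merkle_root(leaves):
    """SHA-256 Merkle root; the last node is duplicated on odd levels."""
    if not leaves:
        raise ValueError("cannot build a Merkle root over zero leaves")
    level = [hashlib.sha256(leaf).digest() for leaf in leaves]
    while len(level) > 1:
        if len(level) % 2 == 1:
            level.append(level[-1])
        level = [hashlib.sha256(level[i] + level[i + 1]).digest()
                 for i in range(0, len(level), 2)]
    return level[0]


def batch_root(events, signatures_per_event, source_keys, m):
    """Root over the events that reached quorum, or None if none did."""
    accepted = [event for event, sigs in zip(events, signatures_per_event)
                if has_quorum(event, sigs, source_keys, m)]
    return merkle_root(accepted) if accepted else None
```

Only the value returned by `batch_root` would be committed; the events themselves stay off-chain, and any single event can later be proven against the root with a Merkle path.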

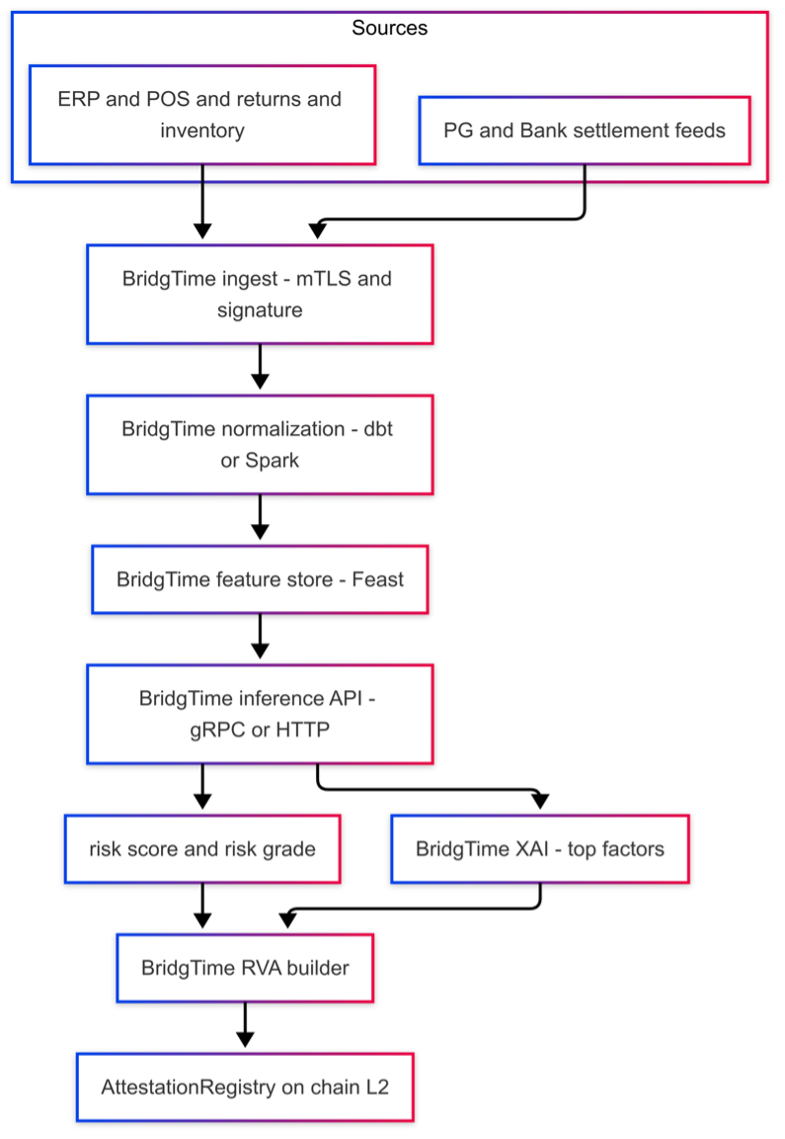

The AI Risk Engine is designed as a pragmatic triage layer for LFP credit teams. It does not attempt to be a perfect classifier; instead, it reduces manual workload while keeping final decisions with human analysts.

Ingest & normalization

• Secure intake of PG and bank settlement events plus ERP/POS sales, refunds, and inventory via mTLS and request signing.

• Streaming via Kafka/Redpanda into a partitioned data lake (S3/GCS) with strong schema and quality checks (dbt/Spark).

• Standardization into a common settlement schema so that PG-specific quirks do not leak into models.

Feature management

• Online/offline feature store (for example Feast) serving features such as:

– rolling 7-day deviation between card-scheme and PG settlement amounts,

– channel-mix weighted margin shifts,

– settlement latency distributions by scheme and channel,

– reversal and chargeback ratios per SKU, branch, or time-of-day,

– merchant “health” composites (ticket size, frequency, variability).

• Full lineage and versioning for reproducible risk decisions.
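As an illustration of the first feature in the list, the sketch below computes a rolling 7-day deviation between card-scheme and PG settlement amounts. The exact definition is an assumption: here it is the relative difference between the two trailing-window totals, and the function name `rolling_deviation` is hypothetical.

```python
from collections import deque


def rolling_deviation(scheme_amounts, pg_amounts, window=7):
    """Relative gap between PG and scheme totals over a trailing window.

    Inputs are daily amounts in the same currency and order. The result for
    each day is (pg_total - scheme_total) / scheme_total over the last
    `window` days, or 0.0 when the scheme total is zero.
    """
    if len(scheme_amounts) != len(pg_amounts):
        raise ValueError("series must have the same length")
    scheme_win = deque(maxlen=window)  # old days fall out automatically
    pg_win = deque(maxlen=window)
    result = []
    for scheme, pg in zip(scheme_amounts, pg_amounts):
        scheme_win.append(scheme)
        pg_win.append(pg)
        scheme_total = sum(scheme_win)
        pg_total = sum(pg_win)
        if scheme_total == 0:
            result.append(0.0)
        else:
            result.append((pg_total - scheme_total) / scheme_total)
    return result
```

A persistently negative value would indicate that the PG is settling less than the scheme reports, which is the kind of signal the anomaly models could consume.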

Models & performance (PoC snapshot)

In PoC environments combining synthetic and anonymized real traces, the engine is tuned around three buckets: Normal, Delayed but explainable, and Anomalous.

| Class | Precision | Recall | Notes |
|---|---|---|---|
| Normal | ≈95% | ≈96% | Low false positives to avoid noisy escalations. |
| Delayed but explainable | ≈89% | ≈84% | Tagged with reasons (holidays, cut-off shifts, backlog). |
| Anomalous (needs review) | ≈91% | ≈88% | Priority queue for LFP analysts. |

In practice this yields:

• a false-positive rate on “Normal” flows of around 2–3%, and

• a reduction in manual review volume in the high-30s to low-40s percent range compared with a simple rule-based baseline, depending on channel mix and seasonality.

Models & serving

• OCR and mapping models for document-based settlement statements.

• Time-series anomaly detection (for example STL/ESD plus Isolation Forest) for volume, latency, and margin shifts.

• Gradient-boosted trees plus policy rules to produce a risk score, map it to a grade, and attach reason codes.

• gRPC/HTTP inference services with a target p95 under 200ms for cached features; batch scoring for reconciliation windows.
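The step from model score to grade and reason codes could look roughly like the sketch below. Everything specific in it is hypothetical: the grade cut-offs, the rule thresholds, the feature names, and the reason codes are placeholders, and the score is assumed to lie in [0, 1] with higher meaning riskier.

```python
from dataclasses import dataclass, field

# Hypothetical grade bands: (inclusive upper bound on score, grade).
GRADE_BANDS = [(0.2, "A"), (0.4, "B"), (0.7, "C"), (1.0, "D")]

# Hypothetical policy rules: (feature, threshold, score floor, reason code).
POLICY_RULES = [
    ("refund_ratio", 0.15, 0.50, "REFUND_RATIO_ELEVATED"),
    ("settlement_lag_days", 3.0, 0.45, "SETTLEMENT_LAG_VS_HISTORY"),
]


@dataclass
class Decision:
    score: float
    grade: str
    reasons: list = field(default_factory=list)


def grade_for(score):
    """Map a score in [0, 1] to the first band whose bound covers it."""
    if not 0.0 <= score <= 1.0:
        raise ValueError("score must be within [0, 1]")
    for upper, grade in GRADE_BANDS:
        if score <= upper:
            return grade
    return GRADE_BANDS[-1][1]


def decide(model_score, features):
    """Apply policy rules on top of the model score, then grade the result."""
    score = model_score
    reasons = []
    for name, threshold, floor, code in POLICY_RULES:
        if features.get(name, 0.0) > threshold:
            score = max(score, floor)  # a rule can raise the score, never lower it
            reasons.append(code)
    return Decision(score=score, grade=grade_for(score), reasons=reasons)
```

For example, `decide(0.1, {"refund_ratio": 0.2})` lifts the score to the rule's floor of 0.50, which falls in band "C" and carries the refund reason code.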

XAI and “why” explanations

• SHAP or permutation-based top-K drivers for each decision, expressed as human-readable reasons such as: “PG settlement lag vs history”, “elevated refund ratio for SKU cohort”, or “abrupt channel-mix shift”.

• These reasons are injected into RVA risk reports so LFP teams can understand and challenge model outputs.

MLOps & security

• MLflow or W&B tracking, PSI/KS drift monitoring, Docker+K8s with HPA and canary rollouts, and rollbacks on degradation.

• Feature and label audits; approval workflow for model promotion.
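For the PSI drift check mentioned above, a minimal sketch of the Population Stability Index over pre-binned counts is shown below. The binning scheme and the small `eps` floor for empty bins are assumptions of the example; any alerting threshold would be a policy choice (values above roughly 0.25 are often quoted as a significant shift).

```python
import math


def psi(expected_counts, actual_counts, eps=1e-6):
    """Population Stability Index between two binned distributions.

    PSI = sum over bins of (a_i - e_i) * ln(a_i / e_i), where e_i and a_i
    are the bin proportions of the reference and current samples.
    """
    if not expected_counts or len(expected_counts) != len(actual_counts):
        raise ValueError("both inputs need the same non-zero number of bins")
    expected_total = sum(expected_counts)
    actual_total = sum(actual_counts)
    if expected_total <= 0 or actual_total <= 0:
        raise ValueError("both samples must be non-empty")
    total = 0.0
    for expected, actual in zip(expected_counts, actual_counts):
        e_pct = max(expected / expected_total, eps)  # floor avoids log(0)
        a_pct = max(actual / actual_total, eps)
        total += (a_pct - e_pct) * math.log(a_pct / e_pct)
    return total
```

Identical distributions give a PSI of zero, and the value grows as the current feature distribution moves away from the training-time reference.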

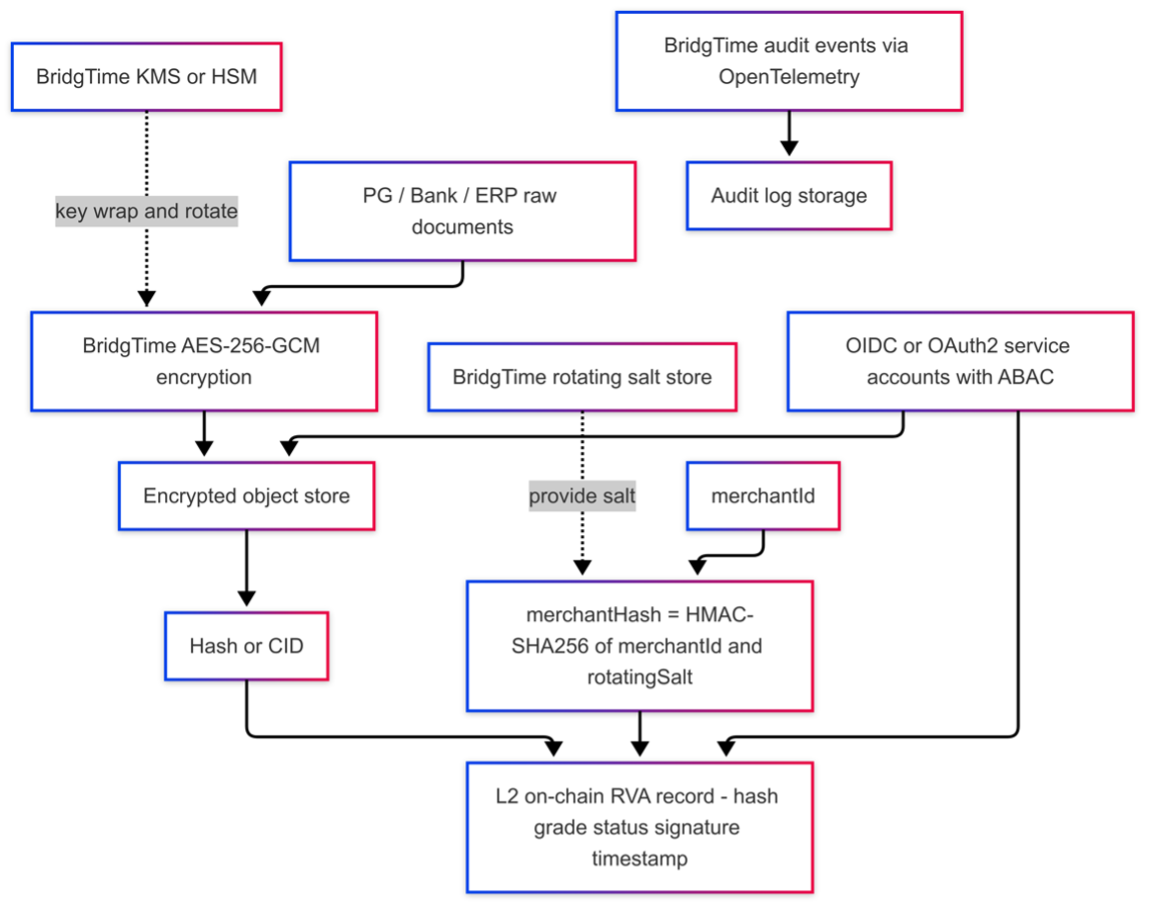

• Off-chain data encrypted with AES-256-GCM, merchant identifiers pseudonymized with HMAC-SHA256 and rotating salts; access via OIDC/OAuth2 plus RBAC/ABAC.

Ultimately, the AI Risk Engine produces a risk score, grade, and explanation set that flows into the RVA builder, which then decides which pieces of that information are anchored on-chain and which remain off-chain.

AI Risk Engine pipeline (high-level)

flowchart LR

ING["PG / Bank\nsettlement feeds"] --> NORM["Normalize &\nquality checks"]

NORM --> FEAT["Feature store\n(online / offline)"]

FEAT --> MODEL["AI risk models\n+ rules engine"]

MODEL --> SCORE["Risk score,\ngrade & reasons"]

SCORE --> RVA["RVA builder\n(on / off-chain)"]

• On-chain holds minimal metadata; RVA entries do not create legal rights in receivables. Originals remain encrypted off-chain (AES-256-GCM) with KMS/HSM-backed key generation, rotation, and disposal.

• merchantHash uses HMAC-SHA256 pseudonymization; a rotating salt is held in the key vault and never written on-chain.

• Access control blends OIDC/OAuth2 service accounts with fine-grained ABAC; all access is logged via OpenTelemetry and centralized SIEM.

• Retention and deletion policies apply off-chain; where necessary, deletion receipts can be anchored back to the chain via hash commitments.
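The merchantHash pseudonymization can be sketched with the standard library. The salt-versioning scheme here is an assumption added for illustration: because the salt rotates, the same merchant maps to a different hash in each salt epoch, so the sketch returns the salt version alongside the hash. In practice the salts would come from the key vault rather than a dictionary.

```python
import hashlib
import hmac


def pseudonymize(merchant_id, salts, version):
    """Return (salt version, hex HMAC-SHA256 of the merchant ID).

    `salts` maps a version number to secret salt bytes. Only the hash would
    ever be written on-chain; salts and raw IDs stay off-chain.
    """
    salt = salts[version]
    digest = hmac.new(salt, merchant_id.encode("utf-8"), hashlib.sha256)
    return version, digest.hexdigest()
```

The digest is 32 bytes, so it fits the `bytes32 merchantHash` field in the sample contract. Without the salt, an observer cannot confirm a guessed merchant ID by hashing it.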

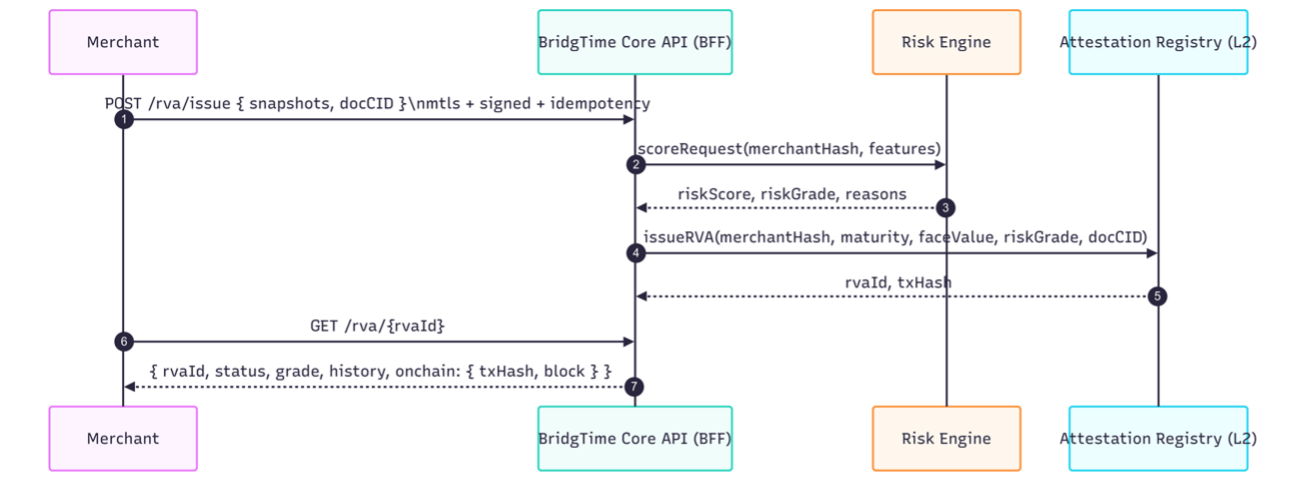

• Unified via a BridgTime Core API (BFF).

• Representative endpoints: onboarding/consent, RVA issue requests, RVA status lookup, and settlement webhooks for oracles.

• Security: mTLS, HTTP-signature or HMAC, idempotency keys, rate limiting, WAF, and schema validation (JSON Schema/Protobuf).

• Versioned JSON responses and errors, consistent pagination and filtering.

• Tooling: TypeScript/Go/Python SDKs; sandbox with test vectors, replay tools, and sample subgraph queries.

Note: specific paths, fields, and security parameters may be adjusted per partner or regulatory requirements.
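A webhook receiver that combines HMAC signing, a clock-skew window, nonces, and idempotency keys might be structured like the sketch below. The signed-message layout, the 300-second default skew, the result strings, and the in-memory stores are all assumptions for the example; a real deployment would follow the agreed signature scheme and keep nonces and idempotency keys in a shared store with expiry.

```python
import hashlib
import hmac


def sign(secret, timestamp, nonce, idempotency_key, body):
    """Hex HMAC-SHA256 over the request metadata and raw body bytes."""
    message = f"{timestamp}.{nonce}.{idempotency_key}.".encode("utf-8") + body
    return hmac.new(secret, message, hashlib.sha256).hexdigest()


class RequestVerifier:
    def __init__(self, secret, max_skew=300):
        self._secret = secret
        self._max_skew = max_skew  # seconds of tolerated clock drift
        self._seen_nonces = set()
        self._processed_keys = set()

    def verify(self, timestamp, nonce, idempotency_key, body, signature, now):
        """Classify a request as accepted, duplicate, or rejected."""
        if abs(now - timestamp) > self._max_skew:
            return "rejected:clock-skew"
        expected = sign(self._secret, timestamp, nonce, idempotency_key, body)
        if not hmac.compare_digest(expected, signature):
            return "rejected:bad-signature"
        if idempotency_key in self._processed_keys:
            return "duplicate"  # safe retry: acknowledge without reprocessing
        if nonce in self._seen_nonces:
            return "rejected:replayed-nonce"
        self._seen_nonces.add(nonce)
        self._processed_keys.add(idempotency_key)
        return "accepted"
```

The signature is checked before any state is touched, so unauthenticated traffic cannot fill the nonce or idempotency stores.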

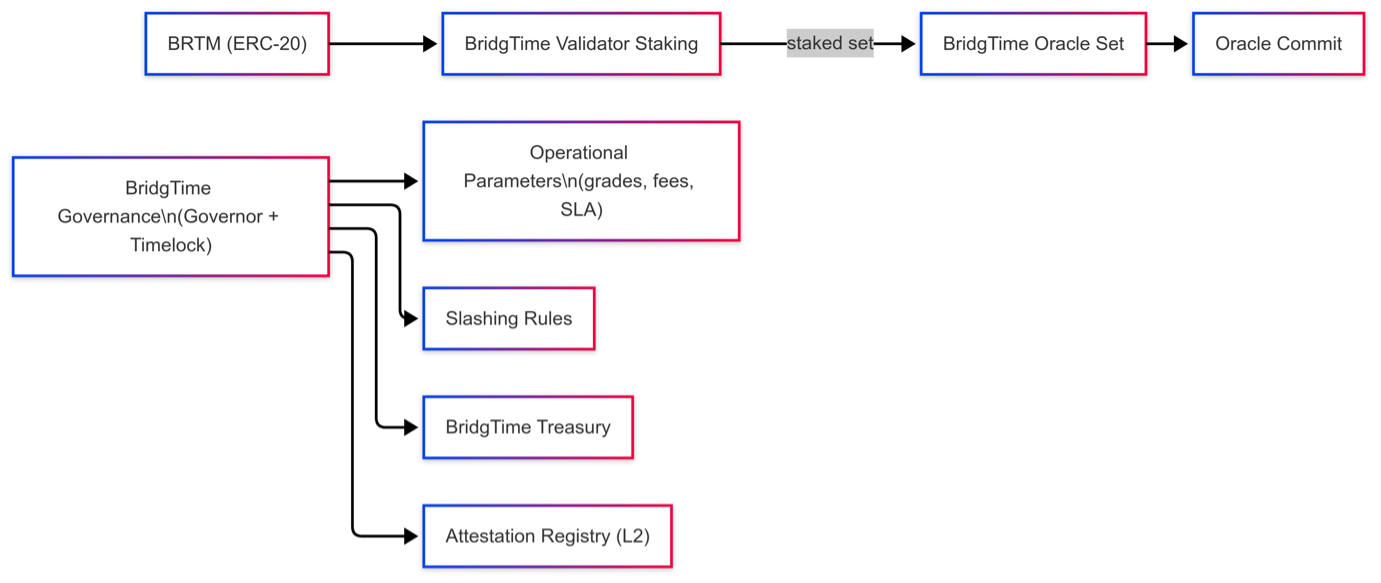

• Purpose & scope: BRTM is a live utility token for access/usage credits, validator/oracle bonding, and limited participation in operating-parameter governance. It does not provide dividends, revenue share, or redemption rights.

• Access/fees: optional credits or discounts for dashboards, APIs, and reports. Primary payments are in fiat or stablecoins; BRTM is an optional convenience rather than a general means of payment or store of value.

• Staking and bonding: operators stake and bond BRTM to participate as oracles or indexers. Unbonding periods, slashing, and dispute windows are set via governance; non-transferable proof NFTs or SBTs may be used to represent roles.

• Governance: proposal → vote → timelock → execute for public-scope parameters such as grade tables, oracle sets, SLA/SLO thresholds, and slashing rules. Over time, control can be migrated from admin keys to multisig-plus-timelock.

• On/off-chain boundary: only minimal metadata and governance state are on-chain; detailed policy documents and logs live in off-chain audit storage and are indexed via subgraphs.

• Notice: BRTM is a utility-only token. Policies and parameters may be updated, deferred, or halted based on security, regulatory, or market conditions.
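The proposal → vote → timelock → execute flow can be modelled in a few lines. This is a toy off-chain model, not the Governance contract: the quorum rule (turnout at or above a threshold and more weight for than against), the class names, and the parameter name used in the usage note are all assumptions.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Proposal:
    param: str
    new_value: int
    votes_for: int = 0
    votes_against: int = 0
    queued_at: Optional[int] = None
    executed: bool = False


class Governance:
    def __init__(self, quorum, timelock_seconds):
        self.quorum = quorum
        self.timelock_seconds = timelock_seconds
        self.params = {}

    def vote(self, proposal, weight, support):
        """Add voting weight; voting closes once the proposal is queued."""
        if proposal.queued_at is not None:
            raise ValueError("voting is closed")
        if support:
            proposal.votes_for += weight
        else:
            proposal.votes_against += weight

    def queue(self, proposal, now):
        """Start the timelock if the vote passed; return whether it is queued."""
        turnout = proposal.votes_for + proposal.votes_against
        passed = turnout >= self.quorum and proposal.votes_for > proposal.votes_against
        if passed and proposal.queued_at is None:
            proposal.queued_at = now
        return proposal.queued_at is not None

    def execute(self, proposal, now):
        """Apply the change only after the timelock has fully elapsed."""
        if proposal.executed or proposal.queued_at is None:
            return False
        if now < proposal.queued_at + self.timelock_seconds:
            return False
        self.params[proposal.param] = proposal.new_value
        proposal.executed = True
        return True
```

With a one-hour timelock, a proposal such as `Proposal("unbonding_days", 14)` queued at time 1000 cannot take effect before time 4600, which gives operators a window to react to a parameter change before it applies.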

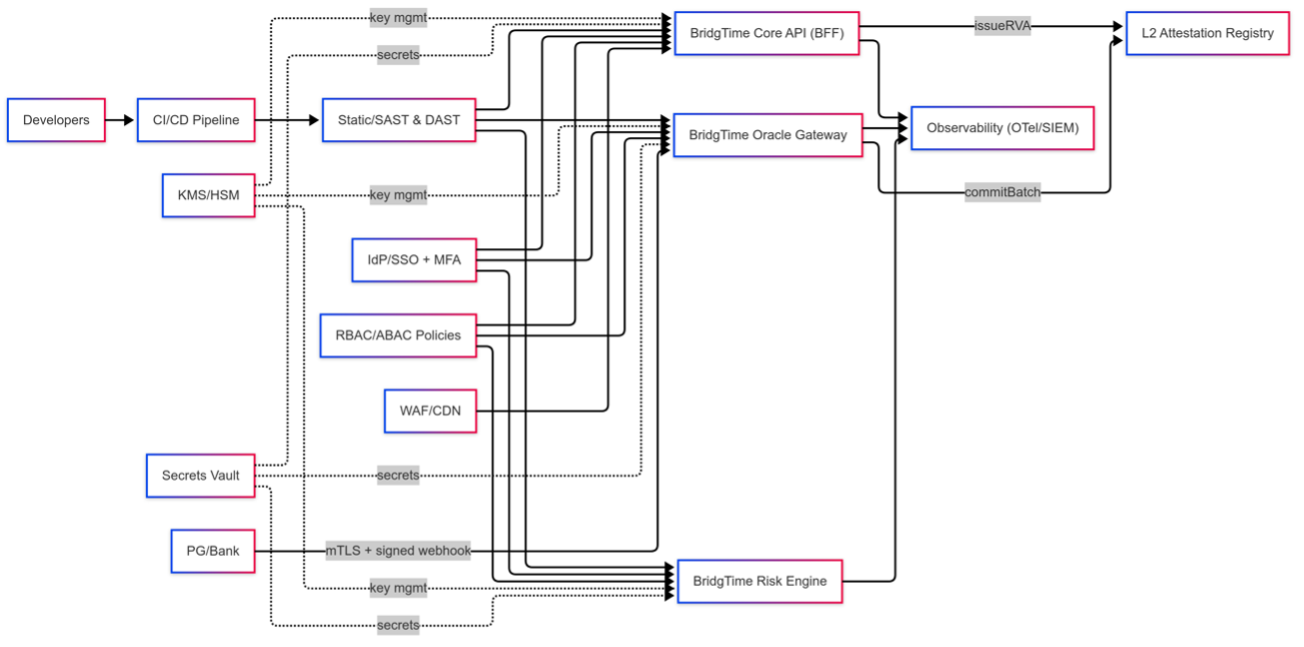

• Keys & secrets: KMS/HSM for signing and data keys; separate key families for on-chain operations (secp256k1) versus webhook signing (Ed25519/ECDSA). Rotation and rollover audits; dynamic secret leasing for app services.

• Code & contracts: SAST/DAST, SBOM and dependency scans; at least two external audits plus fuzz/property testing. Upgradeable proxies only where strictly needed early on, with a plan to reduce mutability over time; emergency pause plus root-cause analysis.

• Network & access: mTLS and short-lived service tokens; WAF/CDN, rate limits, schema and signature validation; SSO + MFA for operators; RBAC/ABAC and change control procedures.

• Observability: OpenTelemetry logs, metrics, and traces into a central SIEM; real-time alerts on auth failures, signature errors, and latency/drop spikes; vulnerability disclosure and bug bounty programs; signed change logs.

• Data boundary: on-chain holds minimal metadata; encrypted off-chain originals; deletion proofs via hash anchoring where needed; pseudonymized merchant IDs.

• Operational safety: independent pre-launch audits, multi-region failover, timelocks on sensitive operations, tested backups and recovery; multisig plus delay for protocol-level changes.

Diagram note: interfaces and connections are subject to adjustment at deployment and as partners and regulators provide feedback.

For the first 12–24 months, BridgTime focuses on a narrow, executable scope rather than “every possible receivable in every market”. The diagrams and notes below describe what is explicitly in and out of scope, and how the product is expected to evolve from v0 to v2.

In scope vs out of scope (early phases)

flowchart TB

IN_SCOPE["In scope (v0–v1,\nnext 12–18 months)"]

OUT_SCOPE["Out of scope\n(for now)"]

IN_SCOPE --> IN_DATA["Card and PG settlement data\nfor domestic PG A and B"]

IN_SCOPE --> IN_SECTORS["Convenience stores,\npharmacies and similar retail"]

IN_SCOPE --> IN_COUNTRY["Single country\n(single currency)"]

IN_SCOPE --> IN_USE["RVA reports and\nLFP-facing dashboards"]

OUT_SCOPE --> OUT_CASH["Cash sales and\noffline-only revenue"]

OUT_SCOPE --> OUT_B2C["B2C BNPL and\nconsumer credit products"]

OUT_SCOPE --> OUT_FOREIGN["Foreign PGs and\nmulti-currency routing"]

OUT_SCOPE --> OUT_EXEC["Direct execution of\nBNPL or card loans"]

class IN_SCOPE in;

class OUT_SCOPE out;

v0 (0–6 months)

• Data sources: PG A settlement exports, a single ERP connector.

• Channels: convenience and pharmacy chains in one country.

• LFP features: RVA PDF/CSV reports only (no direct limit automation).

• On-chain: RVA status plus minimal hash; no per-branch breakdown.

v1 (6–12 months)

• Data sources: PG A plus card scheme files; ERP plus POS summaries.

• Channels: convenience, pharmacy, and selected franchise retail.

• LFP features: basic limit-setting UI, anomaly alerts, and portfolio views.

• On-chain: RVA status plus risk band and validity window.

v2 (12–24 months)

• Data sources: multi-PG, multi-country, FX-aware normalization.

• Channels: additional verticals (QSR, fuel, logistics where applicable).

• LFP features: richer risk scores, marketplace-style matching, and API hooks into credit engines.

• On-chain: extended metadata for audit and more granular attestation types.

The initial product focuses on a single core persona: the finance user at a convenience store supplier. Their end-to-end journey with BridgTime is: connect PG accounts, let the system build RVA candidates, review only exceptions, and selectively share RVA bundles with financing partners.

flowchart LR

U["👩‍💼 Supplier finance\n(convenience)"]

CONNECT["Connect PG account\nor upload settlement file"]

INGEST["System ingests data\nand builds RVA candidates"]

REVIEW["Review exceptions\nand flagged batches"]

SHARE["Click 'Share with LFP'\nfor selected RVA"]

LFP_VIEW["LFP receives RVA\nsummary and risk report"]

U --> CONNECT --> INGEST --> REVIEW --> SHARE --> LFP_VIEW

At each step:

• Connect: the user links PG A credentials or drops a CSV/XLS into the BridgTime UI.

• Ingest: BridgTime normalizes and reconciles the feed, generating draft RVA batches.

• Review: the UI shows a prioritized list of anomalies and exceptions instead of every single batch.

• Share: for selected RVAs, the user clicks “Share with LFP” to grant read access and send a summary package.

• LFP view: the LFP sees a compact RVA summary, risk grade, and reasons, and can decide on limits or prepayment.

A single RVA cycle involves four main actors. The diagram summarizes what each of them actually has to do in early versions of BridgTime.

flowchart TB

M["🏪 Merchant /\nSupplier finance"]

PG["🏦 PG /\nAcquirer"]

ERP["🧾 ERP /\nBackoffice"]

LFP["💳 Licensed\nFinancing Partner"]

M --> M_TASKS["• Connect PG account\n• Review exceptions\n• Approve RVA sharing"]

PG --> PG_TASKS["• Enable connector\n• Deliver settlement logs\n• Maintain schemas"]

ERP --> ERP_TASKS["• Map fields to RVA\n• Provide sales/returns\n• Support reconciliations"]

LFP --> LFP_TASKS["• View RVA and risk\n• Set limits and alerts\n• Decide prepayment"]

class M,PG,ERP,LFP role;

class M_TASKS,PG_TASKS,ERP_TASKS,LFP_TASKS task;

• Merchant / Supplier finance: connects PG accounts, reviews exceptions the engine flags, and approves whether an RVA bundle can be shared with an LFP.

• PG / Acquirer: installs and maintains the connector, delivers settlement logs, and keeps schemas in sync with BridgTime's standard.

• ERP / Backoffice: maps internal fields to the RVA schema, provides sales and returns streams, and supports reconciliation workflows.

• LFP: consumes RVA and risk summaries, configures limits and alert thresholds, and ultimately decides on prepayment and credit offers.